Since I first heard about Microsoft Fabric during private preview, I have spent many hours pondering the workspace topologies available to us. The workspace-based setup gives us the power to spin up workspaces quickly and easily. But with that power comes great responsibility.

But why is that? As time goes on, I can see us struggling with workspace proliferation in exactly the same way that BI has suffered from KPI and report proliferation: multiple copies of the same workspace, each designed for a slightly different purpose. To counter that we need to carefully plan and curate our workspace creation, which means we need a set of topologies that we can follow to make our lives easier.

But what is a workspace topology? The best way to think of it is a blueprint for how we're going to create workspaces and link them together.

As I thought about this more and more, three clear topologies began to emerge. I've called these:

- Monolith warehouse

- Hub and spoke

- Data mesh

Monolith warehouse

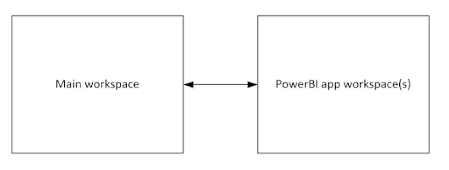

Microsoft Fabric supports the same monolith setup that we've been using for years. By setting up at least two workspaces we can have a 'main workspace' that is used for data engineering tasks (e.g. ELT, Lakehouse/Warehouse, semantic model, etc.) and a Power BI app workspace that can be used for distributing content and downstream use cases. In reality this main workspace could be either a single Lakehouse/Warehouse split into bronze, silver, and gold layers by schema, or separate workspaces for each layer of the medallion architecture.
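To make the blueprint idea concrete, here is a minimal Python sketch of the monolith layout as plain data. It doesn't call any Fabric API; the `Workspace` class, the `monolith_blueprint` function, the `sales` project name, and the naming pattern are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workspace:
    name: str
    purpose: str


def monolith_blueprint(project: str, env: str) -> dict:
    """Describe the two workspaces of a monolith setup for one environment.

    The naming pattern "<project>-<role>-<env>" is an assumption for this
    sketch, not a Fabric convention.
    """
    main = Workspace(
        f"{project}-main-{env}",
        "data engineering: ELT, Lakehouse/Warehouse, semantic model",
    )
    app = Workspace(
        f"{project}-app-{env}",
        "Power BI app: content distribution",
    )
    # A link is a (producer, consumer) pair of workspace names.
    return {"workspaces": [main, app], "links": [(main.name, app.name)]}


# Replicating the same blueprint across environments is a single loop.
environments = {
    env: monolith_blueprint("sales", env) for env in ("dev", "test", "prod")
}

for env, blueprint in environments.items():
    print(env, blueprint["links"])
```

Because the whole topology is two workspaces and one link, stamping it out for dev/test/prod is trivial, which is a large part of this setup's appeal.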

The advantage of this setup is that it's already familiar to most developers, quick to deploy, easy to maintain, and easy to replicate when you set up dev/test/prod environments. The disadvantage is that it can limit your ways of working as you grow, and it doesn't offer any level of decoupling between your data source formats and your Lakehouse/Warehouse for reporting. That means if a data format changes in the source system, you are going to need to trace that change all the way through your Warehouse/Lakehouse.

Personally, I'd consider this setup for the SME sector. But for anything bigger, I'd start at a hub and spoke model.

Hub and spoke

In this setup we first land data from our source systems into dedicated workspaces. At this stage we undertake ELT to establish data products based on specific data contracts (e.g. we might have an internal layout for customer data, sales data, etc).
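A data contract can be as simple as an agreed list of columns and types. The sketch below is one hypothetical way to express and check that in Python; the `DataContract` class, the `violations` helper, and the customer columns are assumptions for illustration, not something Fabric provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    name: str
    columns: dict  # column name -> expected Python type


def violations(contract: DataContract, row: dict) -> list:
    """Return a list of human-readable problems; empty means the row conforms."""
    problems = []
    for column, expected in contract.columns.items():
        if column not in row:
            problems.append(f"missing column: {column}")
        elif not isinstance(row[column], expected):
            actual = type(row[column]).__name__
            problems.append(f"{column}: expected {expected.__name__}, got {actual}")
    return problems


# Hypothetical internal layout for customer data.
customer_contract = DataContract(
    "customer",
    {"customer_id": int, "full_name": str, "country": str},
)

good_row = {"customer_id": 1, "full_name": "Ada Lovelace", "country": "GB"}
bad_row = {"customer_id": "1", "full_name": "Ada Lovelace"}

print(violations(customer_contract, good_row))
print(violations(customer_contract, bad_row))
```

In practice a check like this would run at the end of the ELT step in the source system workspace, so that only conforming data products are published onwards.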

Once we have those data products in our source system workspaces, they are consumed into our Lakehouse/Warehouse workspace to establish our centralised monolith. This then feeds downstream workspaces that are established for a specific purpose, such as building predictive sales models for marketing or creating a Power BI app for exec-level reporting.

With this setup, changes to upstream systems have limited impact because the Lakehouse/Warehouse is now decoupled from them through our internal data products and associated contracts. As long as the change has limited impact on our data product, its effects are contained within our source system workspace. We're also able to future-proof ourselves to some degree. As the business grows and technical skills move back to the departments with the domain knowledge, we are able to migrate to a full data mesh topology.
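The decoupling can be illustrated with a small Python sketch. Assume, hypothetically, that a CRM system renames its fields between two versions: only the mapping that lives in the source system workspace changes, while the data product seen by the Lakehouse/Warehouse stays identical. The field names and the `to_customer_product` helper are invented for this example.

```python
def to_customer_product(source_row: dict, field_map: dict) -> dict:
    """Reshape a raw source row into the internal customer data product.

    field_map maps data product column -> source column.
    """
    return {target: source_row[source] for target, source in field_map.items()}


# Version 1 of the (hypothetical) CRM export.
crm_v1_map = {"customer_id": "CustID", "full_name": "Name"}
crm_v1_row = {"CustID": 7, "Name": "Ada Lovelace"}

# Version 2 renames its fields; only the mapping has to change.
crm_v2_map = {"customer_id": "customer_key", "full_name": "display_name"}
crm_v2_row = {"customer_key": 7, "display_name": "Ada Lovelace"}

product_v1 = to_customer_product(crm_v1_row, crm_v1_map)
product_v2 = to_customer_product(crm_v2_row, crm_v2_map)

# The downstream Lakehouse/Warehouse sees the same shape either way.
print(product_v1 == product_v2)
```

Contrast this with the monolith, where the same rename would have to be traced through every layer that reads the raw field names.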

The disadvantage is that maintaining this setup across dev/test/prod environments will carry additional overhead, and deployments will take longer to action.

My opinion is that this setup is going to be right for most large-scale Fabric implementations.

Data mesh

Lastly we have our data mesh. In this setup we extend the hub and spoke model further. Rather than requiring all data products to go via our Lakehouse/Warehouse, we allow them to bypass it. Data products can be shared directly between source systems and downstream use cases, which allows new data products to be developed on the fly without the need to involve centralised IT teams in their creation.
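One way to see the difference between hub and spoke and a data mesh is to treat the topology as a list of links between workspaces and look for links that skip the hub. This is only a sketch; the workspace names and the `direct_links` helper are hypothetical.

```python
def direct_links(links: list, hub: str) -> list:
    """Return the (producer, consumer) links that do not pass through the hub."""
    return [(src, dst) for src, dst in links if hub not in (src, dst)]


hub = "warehouse"

# Hub and spoke: every link has the central Lakehouse/Warehouse at one end.
hub_and_spoke = [
    ("crm", "warehouse"),
    ("erp", "warehouse"),
    ("warehouse", "marketing"),
    ("warehouse", "exec-reporting"),
]

# Data mesh: the same links, plus data products shared directly.
data_mesh = hub_and_spoke + [
    ("crm", "marketing"),  # source system straight to a downstream use case
    ("crm", "erp"),        # source system to source system
]

print(direct_links(hub_and_spoke, hub))
print(direct_links(data_mesh, hub))
```

An empty result means the topology is still a pure hub and spoke. Any link that shows up is a data product on the mesh that the central team is not mediating.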

Whilst this setup offers the most flexibility, it does come with the highest governance overhead to ensure data products on the mesh are trusted and discoverable.
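As a rough illustration of what "trusted and discoverable" might mean in practice, the sketch below models a tiny catalogue of data products with an owner and a certification flag, plus a keyword search that only returns certified entries. The `CatalogEntry` class, the `find_trusted` function, and the sample entries are all assumptions for this example rather than a description of any Fabric feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    product: str
    owner: str
    description: str
    certified: bool  # has the product passed whatever review the organisation sets?


def find_trusted(catalog: list, keyword: str) -> list:
    """Return names of certified data products whose description mentions the keyword."""
    keyword = keyword.lower()
    return [
        entry.product
        for entry in catalog
        if entry.certified and keyword in entry.description.lower()
    ]


catalog = [
    CatalogEntry("customer", "crm-team", "Customer master data", True),
    CatalogEntry("customer-scratch", "marketing", "Experimental customer segments", False),
    CatalogEntry("sales", "erp-team", "Daily sales by customer and product", True),
]

print(find_trusted(catalog, "customer"))
```

The overhead comes from keeping a catalogue like this accurate: every team that publishes onto the mesh has to register, describe, and certify its products.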

My opinion is that this sort of setup is likely to be right for a small number of organisations with very specific requirements.

Do you have any thoughts on the above, or more topologies that you think I've missed? Feel free to drop a message in the comments below.
